🦋 Glasswing #9 - Contextual Confidence and Generative AI

How does generative AI impact effective communication? We outline a framework to understand how AI challenges communication & what to do in response

Glasswing blogs are back! I have so much exciting content coming your way. Send your friends this blog if you think they may be interested in reading.

To get us started again, I have a new paper that I just released with some incredible co-authors! I think this paper is especially timely: the US Executive Order, the Bletchley Declaration, and the G7 Code of Conduct all came out in the past week, signaling a global shift in AI policy.

There is growing recognition that action is needed in response to the challenges generative AI brings. My hope is that this paper will help guide policy leaders to integrate contextual confidence as a key framework in addressing these complex issues!

How does generative AI impact effective communication?

In a new paper co-authored with Zoë Hitzig (Harvard, OpenAI) & Pamela Mishkin (OpenAI), “Contextual Confidence and Generative AI”, we outline a framework to understand how AI challenges communication & what to do in response.

You can read the full paper here: https://arxiv.org/abs/2311.01193. If you think it is worth sharing this paper with your community, here are the Twitter thread and LinkedIn post.

In this post, I will provide a crude 1-2 minute overview of the paper, and I urge you to read the paper in full if this seems interesting to you!

Let’s start by understanding what makes communication effective in the first place.

1. Our ability to identify context in an interaction (who, where, when, how, why).

With greater mutual confidence in their ability to identify context, participants are able to communicate more effectively. They can choose how and what to communicate based on their understanding of how their audience will understand the communicative commitments of both speaker and listener.

2. Our ability to understand how context is protected (scale, attribution, consent, etc.).

With greater confidence in the degree to which their communication is protected from reuse or repetition, participants can more appropriately calibrate what, if anything, they want to share in the first place. When all participants in an exchange have contextual confidence, communication is most efficient and meaningful. This principle mirrors Shannon’s source coding theorem: messages can be encoded in fewer bits when the receiver will rely on context, as well as the message itself, for decompression.
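To make the Shannon analogy concrete, here is a toy calculation. It is only an illustrative sketch: the contexts, messages, and probabilities are made up for this post and do not come from the paper. It compares the entropy of a message on its own, H(M), with its entropy once the context is known, H(M | C). Entropy lower-bounds the average number of bits needed to encode a message, so a smaller value means shared context lets us say the same thing with less.

```python
import math
from collections import defaultdict

def entropy(probs):
    """Shannon entropy in bits of a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical joint distribution P(context, message), invented for illustration.
joint = {
    ("meeting", "yes"): 0.25,
    ("meeting", "no"): 0.25,
    ("party", "cheers"): 0.25,
    ("party", "bye"): 0.25,
}

def message_entropy(joint):
    """H(M): uncertainty about the message when the context is unknown."""
    marginal = defaultdict(float)
    for (_, message), p in joint.items():
        marginal[message] += p
    return entropy(marginal.values())

def conditional_entropy(joint):
    """H(M | C): average uncertainty about the message once context is known."""
    context_prob = defaultdict(float)
    for (context, _), p in joint.items():
        context_prob[context] += p
    total = 0.0
    for context, pc in context_prob.items():
        conditional = [p / pc for (c, _), p in joint.items() if c == context]
        total += pc * entropy(conditional)
    return total

print(message_entropy(joint))      # bits per message without shared context
print(conditional_entropy(joint))  # bits per message with shared context
```

In this toy setup, a receiver who knows the context only has to distinguish between two plausible messages instead of four, so the second number is smaller than the first.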

In face-to-face communication, we rarely question our ability to identify important contextual markers (who, where, when, and how) of a communication. But as is increasingly and distressingly clear, on the internet you cannot take these for granted!

Effective communication depends on a shared understanding of norms that govern the sharing or reuse of information. For example, I say different things in an in-person meeting if it's “on the record”. But often online, you can’t guarantee who exactly will hear what you have to say.

Since the launch of ChatGPT, news articles and AI policy research have outlined laundry lists of challenges that generative AI presents, and these are often treated as independent issues. We believe all of these challenges can be understood as challenges to contextual confidence!

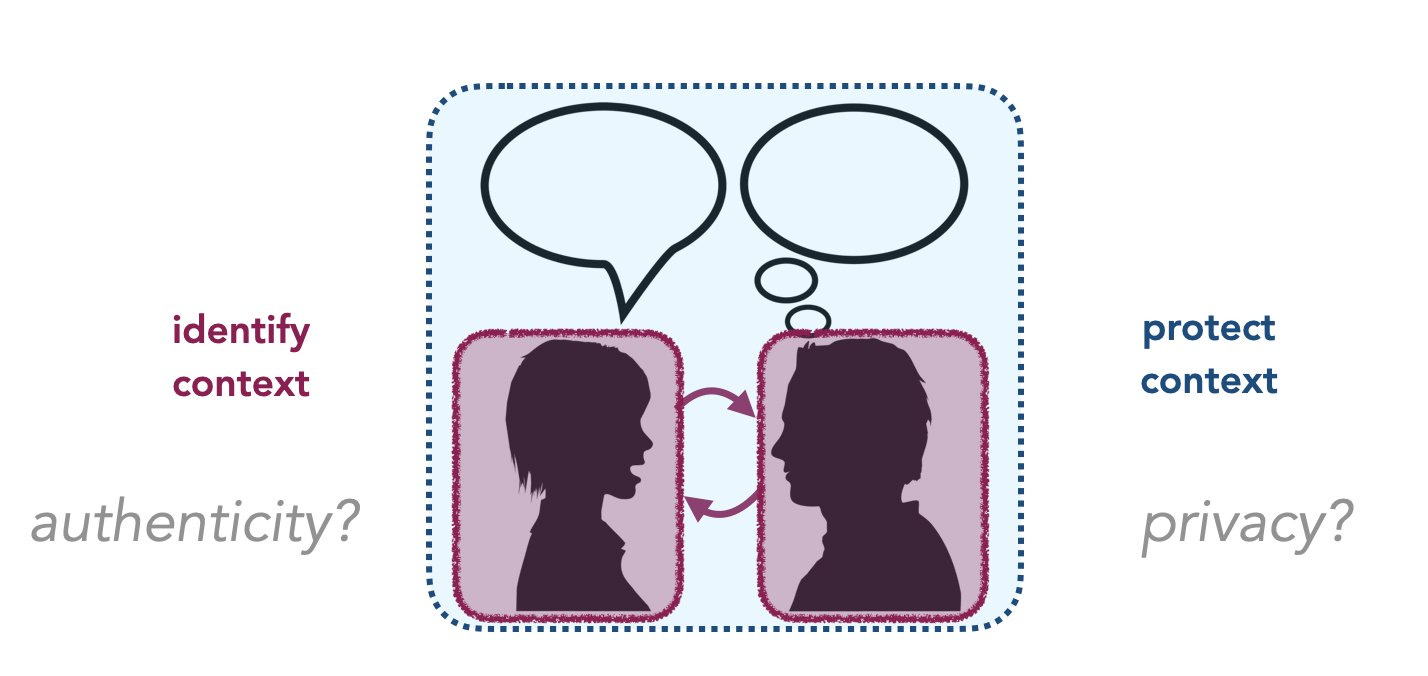

Without an ability to authenticate context, we can’t hold people accountable for protecting it. Without an ability to protect context, it may be useless to authenticate it. In the face of generative AI, authenticity and privacy cannot be treated as distinct issues. This inseparability of authenticity and privacy is what we call contextual confidence.

What is Contextual Confidence?

Contextual Confidence is a framework.

In settings with high contextual confidence, participants are able to both

1. identify the authentic context in which communication takes place, and

2. protect communication from reuse and recombination outside of intended contexts.

What is the purpose of the framework?

Understand the challenges to communication that generative AI presents.

Prioritize the strategies we use to respond to these challenges.

Uncover new strategies that may otherwise remain hidden under the traditional privacy or information integrity lenses.

In the paper, we map out in depth the challenges to identifying and protecting context that generative AI presents. We also present strategies that can be used to respond to each of these challenges, as seen in the table below.

Some new strategies (e.g., relational passwords or deniable messages) only emerge when viewing these challenges through the lens of contextual confidence & otherwise remain hidden under a privacy or information integrity lens.
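For readers who like code, here is one rough way to picture a deniable message. This is my own minimal sketch, not the construction described in the paper, and it assumes the two parties already share a secret key (the key and messages below are hypothetical). Instead of a public-key signature, the sender attaches a shared-key MAC. The recipient can check that the message is authentic, but because the recipient holds the same key and could have produced the tag themselves, the tag proves nothing to a third party.

```python
import hashlib
import hmac

def make_tag(shared_key: bytes, message: bytes) -> bytes:
    """Authenticate a message with a key known to both parties."""
    return hmac.new(shared_key, message, hashlib.sha256).digest()

def verify_tag(shared_key: bytes, message: bytes, tag: bytes) -> bool:
    """Check a tag in constant time."""
    return hmac.compare_digest(make_tag(shared_key, message), tag)

# Hypothetical key and messages, for illustration only.
shared_key = b"secret-known-only-to-alice-and-bob"
alice_message = b"Here is my candid opinion."
alice_tag = make_tag(shared_key, alice_message)

# Bob can confirm the message really came from someone holding the key.
print(verify_tag(shared_key, alice_message, alice_tag))

# Bob could create an equally valid tag for words Alice never wrote,
# so showing a tag to an outsider does not prove Alice said anything.
forged_message = b"Something Alice never said."
forged_tag = make_tag(shared_key, forged_message)
print(verify_tag(shared_key, forged_message, forged_tag))
```

The design choice here is the point: authenticity holds inside the conversation (identifying context) without creating a transferable proof that could be reused outside it (protecting context).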

How do we ensure norms are respected? We recommend two kinds of strategies:

1. Enforcement strategies focus on creating shared context awareness (e.g., agreeing to Terms of Service).

2. Accountability strategies hold individuals responsible for norm violations (e.g., litigation).

We hope this paper motivates prioritizing research & development in strategies enhancing contextual confidence! Existing frameworks for privacy and information integrity are limiting, but contextual confidence can guide risk mitigation without sacrificing utility.

There are also interesting extensions of this work we have started to think about related to model cards, evaluations, and responsible scaling policies. If any of that sounds interesting to you, reach out!

This post is a very crude summary of the 40-page paper, so if you want to engage deeply with the material, I highly recommend you read the paper in full.

We thank Glen Weyl, Danielle Allen, Allison Stanger, Miles Brundage, Sarah Kreps, Karen Easterbrook, Tobin South, Christian Paquin, Daniel Silver and Saffron Huang for comments and conversations that improved the paper.